Modeling Rhythmic Attributes

Overview

Musical meter and attributes of the rhythmic feel such as swing, syncopation, and danceability are crucial when defining musical style. In this work, we propose a number of tempo-invariant audio features for modeling meter and rhythmic feel. Evaluation is performed using the popular Ballroom dataset as well as Pandora’s Music Genome Project® (MGP).

Feature Design

Figure 1. Each of the rhythmic features relies on an accent signal and its autocorrelation, estimates of tempo, and estimates of beats.

The beat profile feature shows the average emphasis of the accent signal between two consecutive beat estimates. A tempogram is simply an autocorrelation with the lag axis converted to tempo in beats per minute. The tempogram ratio feature is a compact representation of the tempogram, showing values that correspond to musically relevant rhythmic ratios anchored on an estimate of tempo. The Mellin scale transform is a measure of signal stability. It is the Fourier transform of a log-lag autocorrelation of the accent signal. Taking the DCT (similar in motivation to MFCCs) creates a sparse, decorrelated representation of this transform.
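To make the tempogram and beat profile descriptions concrete, here is a minimal pure-Python sketch. It is an illustration under stated assumptions, not the implementation used in this work: the function names, the frame rate, the number of bins per beat, and the nearest-frame resampling are all hypothetical choices, and the accent signal and beat positions are synthetic.

```python
def autocorrelation(accent, max_lag):
    """Unnormalized autocorrelation of an accent signal for lags 0..max_lag."""
    n = len(accent)
    return [sum(accent[i] * accent[i + lag] for i in range(n - lag))
            for lag in range(max_lag + 1)]

def lag_to_bpm(lag, frame_rate):
    """Convert a lag in frames to a tempo in beats per minute."""
    return 60.0 * frame_rate / lag

def tempogram(accent, frame_rate, max_lag):
    """Pair each positive lag's autocorrelation value with its tempo in BPM."""
    acf = autocorrelation(accent, max_lag)
    return [(lag_to_bpm(lag, frame_rate), acf[lag])
            for lag in range(1, max_lag + 1)]

def beat_profile(accent, beat_frames, n_bins=8):
    """Average the accent signal between consecutive beats on a fixed grid.

    Each inter-beat interval is sampled at n_bins evenly spaced positions
    (rounded down to a frame), so the profile length does not depend on tempo.
    """
    profile = [0.0] * n_bins
    intervals = list(zip(beat_frames[:-1], beat_frames[1:]))
    for start, end in intervals:
        for b in range(n_bins):
            frame = start + (end - start) * b // n_bins
            profile[b] += accent[frame]
    return [p / len(intervals) for p in profile]

# Toy accent signal (assumed): one impulse every 50 frames at 100 frames
# per second, i.e. one accent every 0.5 s, which corresponds to 120 BPM.
frame_rate = 100
accent = [1.0 if i % 50 == 0 else 0.0 for i in range(1000)]

tg = tempogram(accent, frame_rate, max_lag=120)
peak_bpm, peak_value = max(tg, key=lambda pair: pair[1])

# Assumed beat estimates that coincide with the accents.
beats = list(range(0, 1000, 50))
profile = beat_profile(accent, beats, n_bins=4)
```

On this toy input the strongest tempogram value sits at 120 BPM, and the beat profile puts all of its energy in the first bin because every accent falls exactly on a beat.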

Figure 2. Samba, Tango, and Jive examples are shown for each of the rhythmic features.
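The scale transform feature described above (Fourier transform of a log-lag autocorrelation, followed by a DCT) can be sketched in the same spirit. This follows the prose description literally and leaves out any normalization or weighting a full implementation might apply. The helper names, the linear interpolation, the number of log-lag points, and the number of retained coefficients are assumptions made for illustration only.

```python
import math

def log_lag_resample(acf, n_points):
    """Linearly interpolate acf at log-spaced lags from 1 to len(acf) - 1.

    Lag 0 is skipped because its logarithm is undefined.
    """
    max_lag = len(acf) - 1
    out = []
    for k in range(n_points):
        lag = math.exp(math.log(max_lag) * k / (n_points - 1))
        lo = min(int(lag), max_lag - 1)
        frac = lag - lo
        out.append((1 - frac) * acf[lo] + frac * acf[lo + 1])
    return out

def dft_magnitude(x):
    """Magnitude of the DFT of a real sequence for bins 0..len(x)//2."""
    n = len(x)
    mags = []
    for k in range(n // 2 + 1):
        re = sum(x[t] * math.cos(2 * math.pi * k * t / n) for t in range(n))
        im = -sum(x[t] * math.sin(2 * math.pi * k * t / n) for t in range(n))
        mags.append(math.hypot(re, im))
    return mags

def dct2(x):
    """Unnormalized type-II DCT."""
    n = len(x)
    return [sum(x[t] * math.cos(math.pi * (t + 0.5) * k / n) for t in range(n))
            for k in range(n)]

def scale_transform_features(acf, n_points=64, n_coeffs=8):
    """Log-lag resampling, Fourier magnitude, then the first DCT coefficients."""
    log_acf = log_lag_resample(acf, n_points)
    spectrum = dft_magnitude(log_acf)
    return dct2(spectrum)[:n_coeffs]

# Toy autocorrelation (assumed): a cosine with a period of 50 lags.
acf = [math.cos(2 * math.pi * lag / 50) for lag in range(201)]
features = scale_transform_features(acf)
```

Keeping only the first few DCT coefficients is what gives the compact representation; how many to keep is a tuning choice this sketch does not try to settle.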

Experiments

Two experiments were performed with the presented features. The first uses the popular Ballroom dataset to classify both ballroom dance style and duple vs. triple meter. The second models some of the rhythmic attributes contained within Pandora's Music Genome Project.

Figure 3. Outline of the experiments performed.

Data: The Music Genome Project

The Music Genome Project contains 500+ expert labels across 1.2M+ songs. We chose to model the following 9 attributes of meter and feel:

- Cut-Time: contains 4 beats per measure with emphasis on the 1st and 3rd beat. The tempo feels half as fast.

- Compound-Duple: contains 2 or 4 sub-groupings of 3 with emphasis on the 2nd and 4th grouping. (6/8, 12/8)

- Triple: contains groupings of 3 with consistent emphasis on the first note of each grouping. (3/4, 3/2, 3/8, 9/8)

- Odd: identifies songs which contain odd groupings or non-constant sub-groupings. (5/8, 7/8, 5/4, 7/4, 9/4)

- Swing: denotes a longer-than-written duration on the beat followed by a shorter duration. The effect is usually perceived on the 2nd and 4th beats of a measure. (1 . . 2 . a 3 . . 4 . a)

- Shuffle: similar to swing, but the warping is felt on all beats equally. (1 . a 2 . a 3 . a 4 . a)

- Syncopation: confusion created by early anticipation of the beat or obscuring meter with emphasis against strong beats.

- Backbeat Strength: the amount of emphasis placed on the 2nd and 4th beat or grouping in a measure or set of measures.

- Danceability: the utility of a song for dancing. This relates to consistent rhythmic groupings with emphasis on the beats.

Results

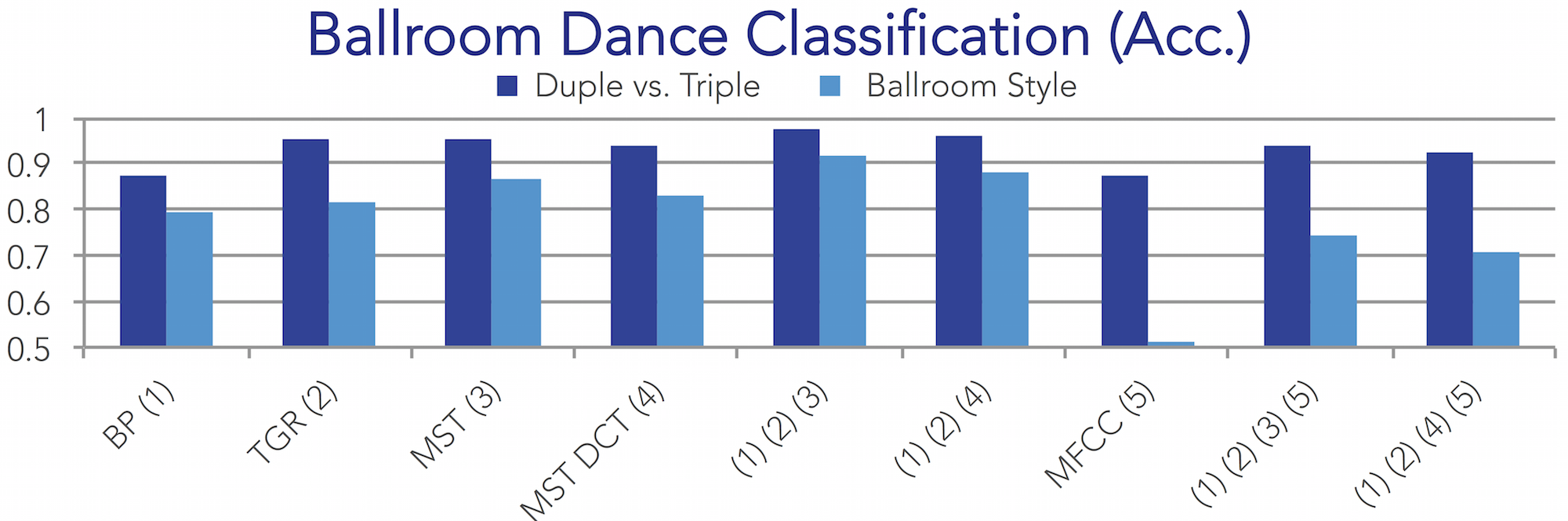

Figure 4. Accuracies for classifying the meter and style of ballroom dances.

Figure 5. Modeling rhythmic attributes of the Music Genome Project.

Conclusion

- The descriptors are able to distinguish meter and rhythmic styles with state-of-the-art performance.

- The descriptors scale to very large datasets and capture rhythmic components that define style.

Follow-up

As a follow-up, we also performed these same experiments using tree ensembles. That work can be found in the proceedings of the Machine Learning for Music Discovery Workshop at the 32nd International Conference on Machine Learning; see the reference in the "Cite This Work" section. [PDF]

References

- S. Böck and G. Widmer, “Maximum filter vibrato suppression for onset detection,” DAFx, 2013.

- D. P. W. Ellis, “Beat Tracking by Dynamic Programming,” Journal of New Music Research, vol. 36, no. 1, pp. 51–60, Mar. 2007.

- A. Holzapfel and Y. Stylianou, “Scale Transform in Rhythmic Similarity of Music,” Audio, Speech, and Language Processing, IEEE Trans., vol. 19, no. 1, pp. 176–185, Jan. 2011.

- G. Peeters, “Rhythm Classification Using Spectral Rhythm Patterns,” ISMIR, 2005.

Cite This Work

- Prockup, M., Asman, A., Ehmann, A., Gouyon, F., Schmidt, E., Kim, Y., "Modeling Rhythm Using Tree Ensembles and the Music Genome Project." Machine Learning for Music Discovery Workshop at the 32nd International Conference on Machine Learning, Lille, France, 2015. [PDF]

- Prockup, M., Ehmann, A., Gouyon, F., Schmidt, E., Kim, Y., "Modeling Rhythm at Scale with the Music Genome Project." IEEE Workshop on Applications of Signal Processing to Audio and Acoustics, New Paltz, New York, 2015. [PDF]